Code [GitHub]

SIGGRAPH 2022 [Paper]

Slides [pptx]

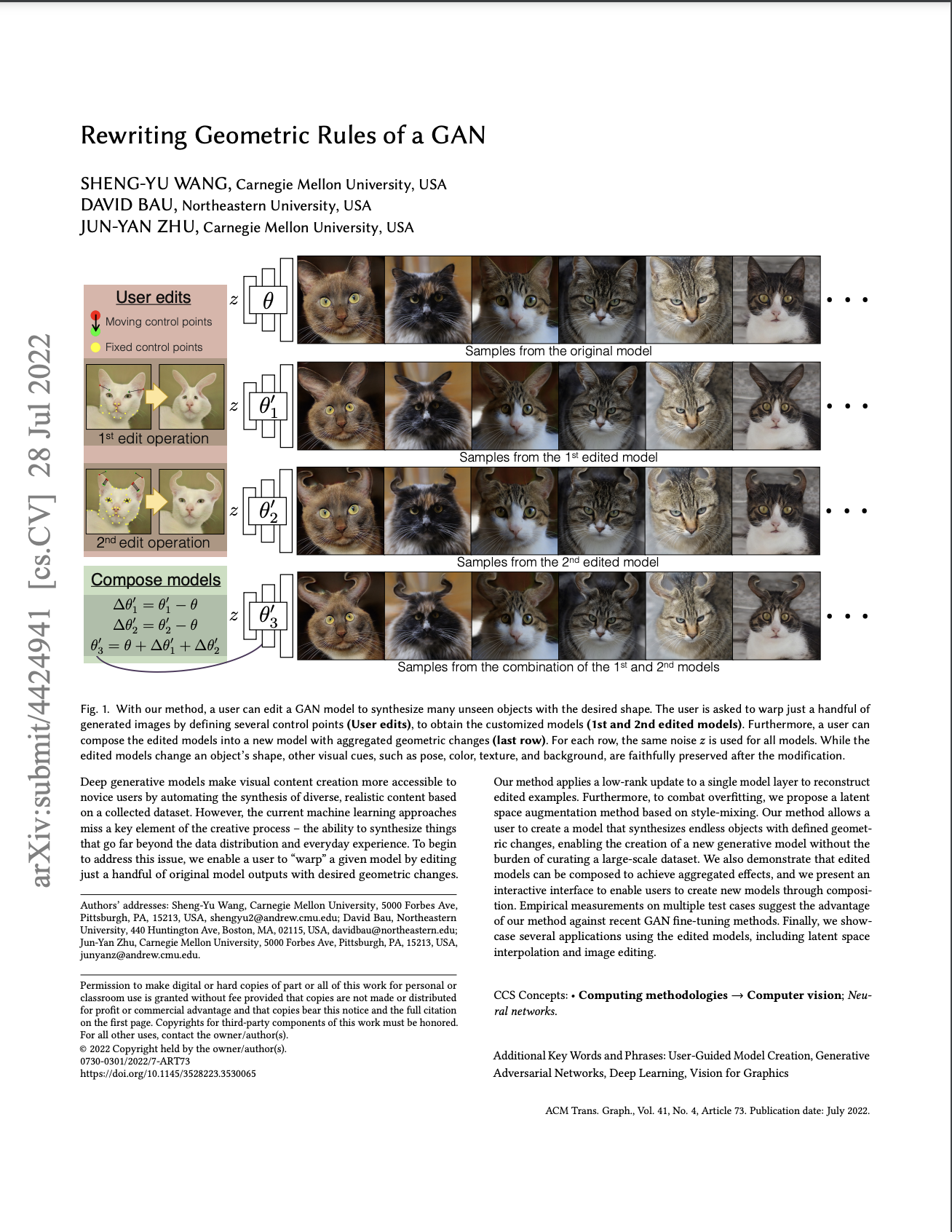

Sheng-Yu Wang, David Bau, Jun-Yan Zhu. Rewriting Geometric Rules of a GAN. In SIGGRAPH, 2022. (Paper)

Related works

Model editing:

Low-rank model updates:

Few-shot finetuning:

Acknowledgements