Sheng-Yu Wang, David Bau, Jun-Yan Zhu. "Sketch Your Own GAN". In ICCV 2021. (Paper)

Results

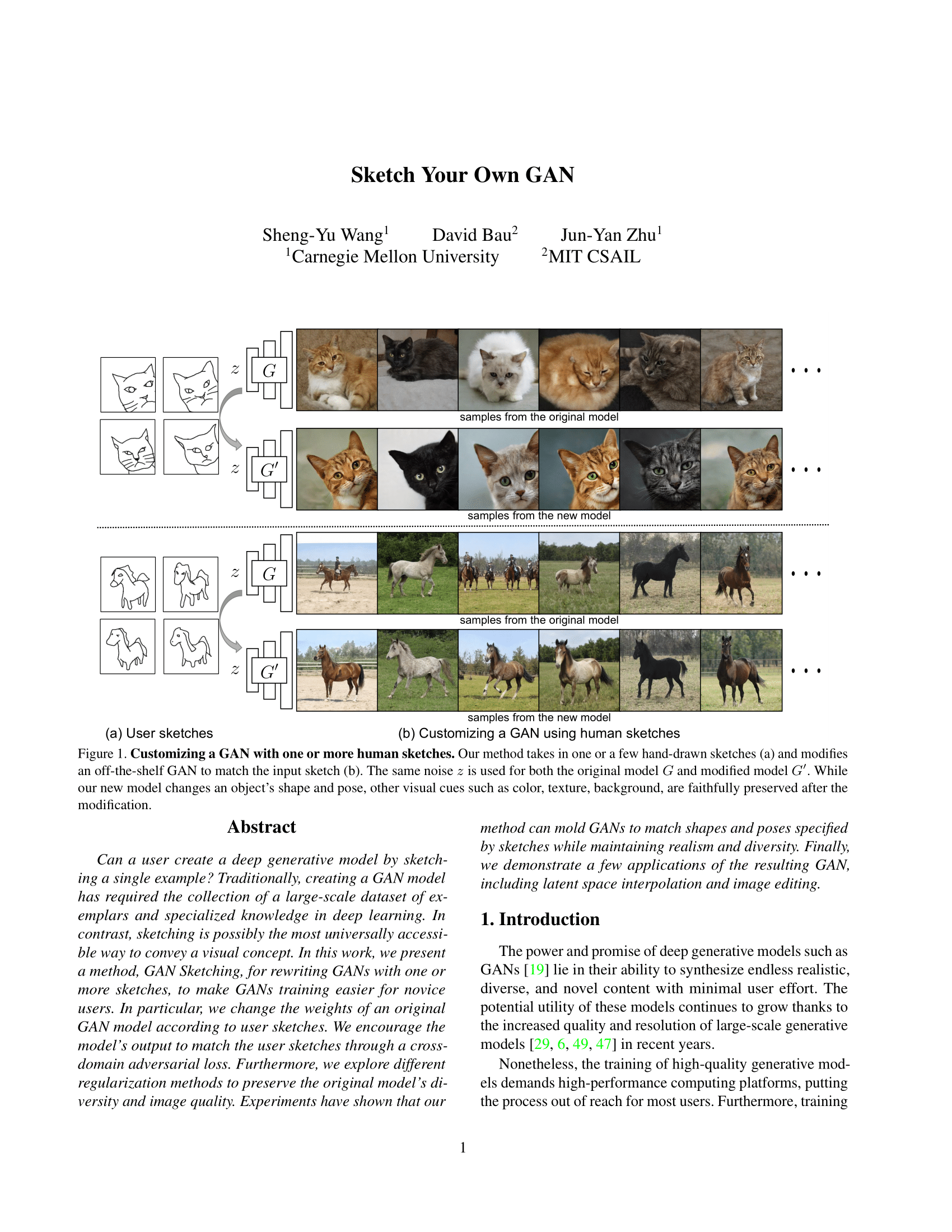

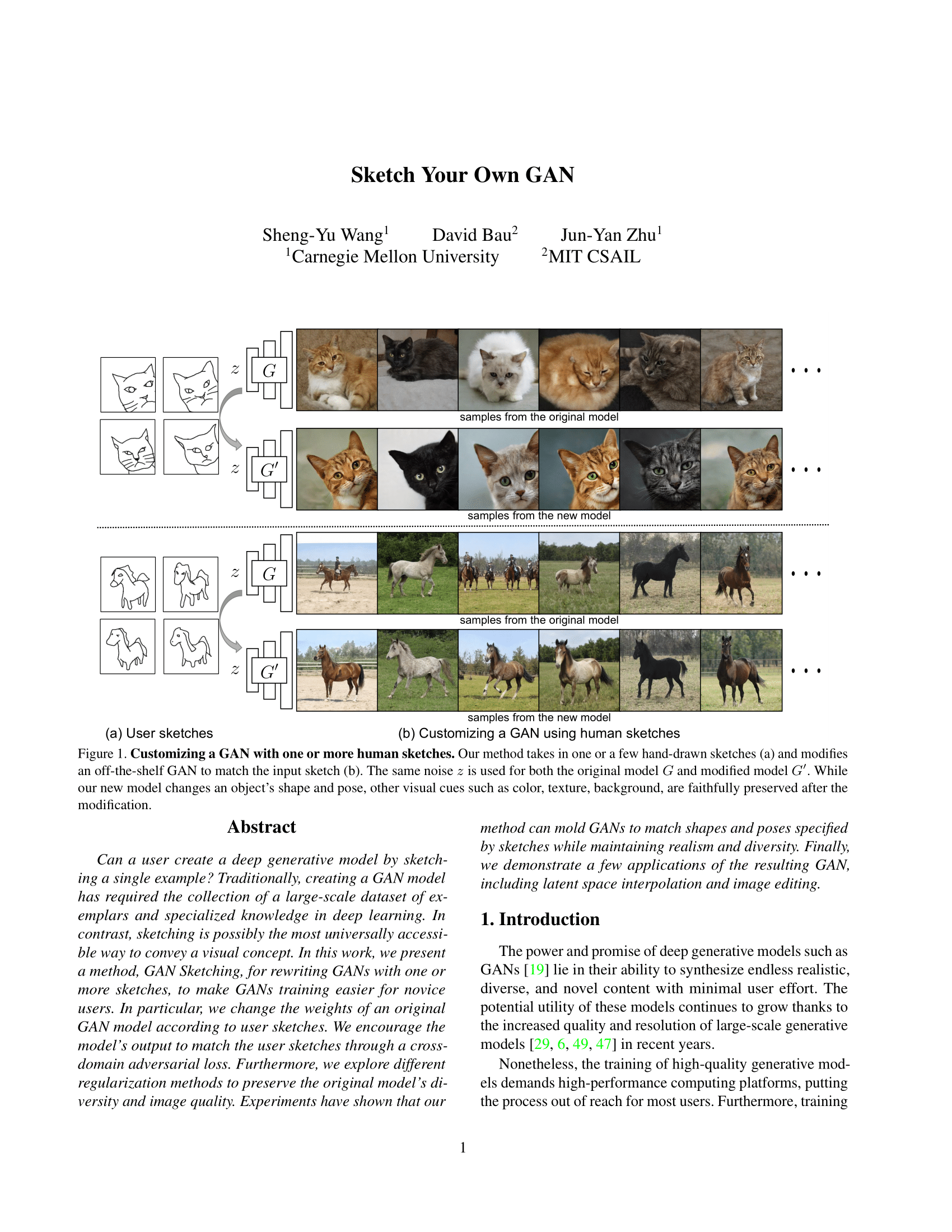

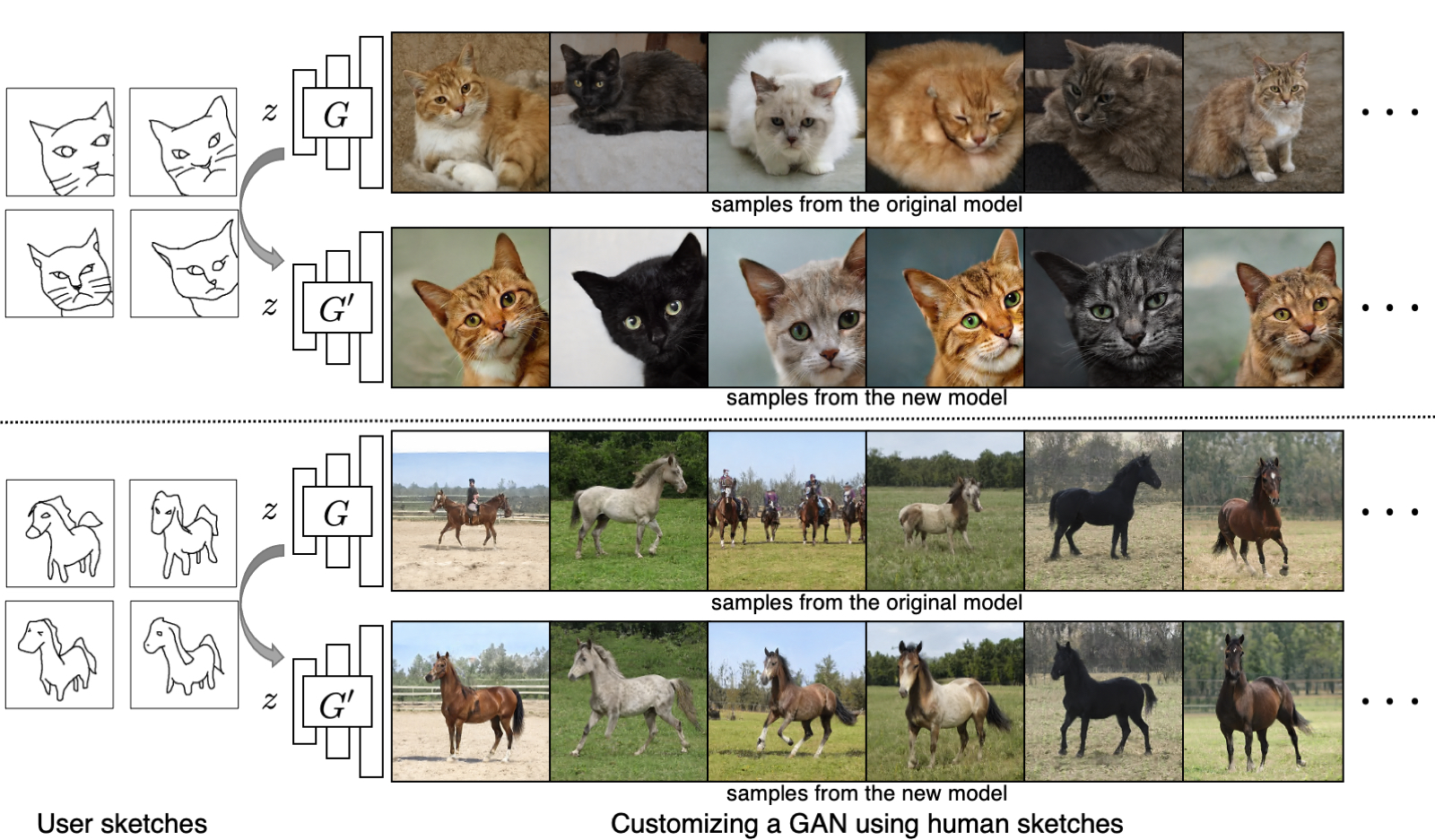

Our method can customize a pre-trained GAN to match input sketches.

Interpolation using our customized models. Latent space interpolation is smooth with our customized models.

Image 1 | Interpolation | Image 2
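The latent space interpolation described above can be sketched as follows. This is a minimal illustration that assumes plain linear interpolation between two latent codes; the latent size of 512, the helper name `interpolate_latents`, and the use of NumPy are assumptions for illustration and are not taken from the released code. The generator itself is not shown.

```python
import numpy as np


def interpolate_latents(z_start, z_end, num_steps):
    """Return num_steps latent codes evenly spaced on the line from z_start to z_end."""
    ts = np.linspace(0.0, 1.0, num_steps)
    return np.stack([(1.0 - t) * z_start + t * z_end for t in ts])


rng = np.random.default_rng(0)
z_a = rng.standard_normal(512)  # assumed latent size, for illustration only
z_b = rng.standard_normal(512)

# Each row of `path` is one latent code; in practice each row would be fed
# to the customized generator to render one frame of the interpolation.
path = interpolate_latents(z_a, z_b, num_steps=8)
```

The first and last rows of `path` are the two endpoint codes, so the rendered sequence would start at Image 1 and end at Image 2.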

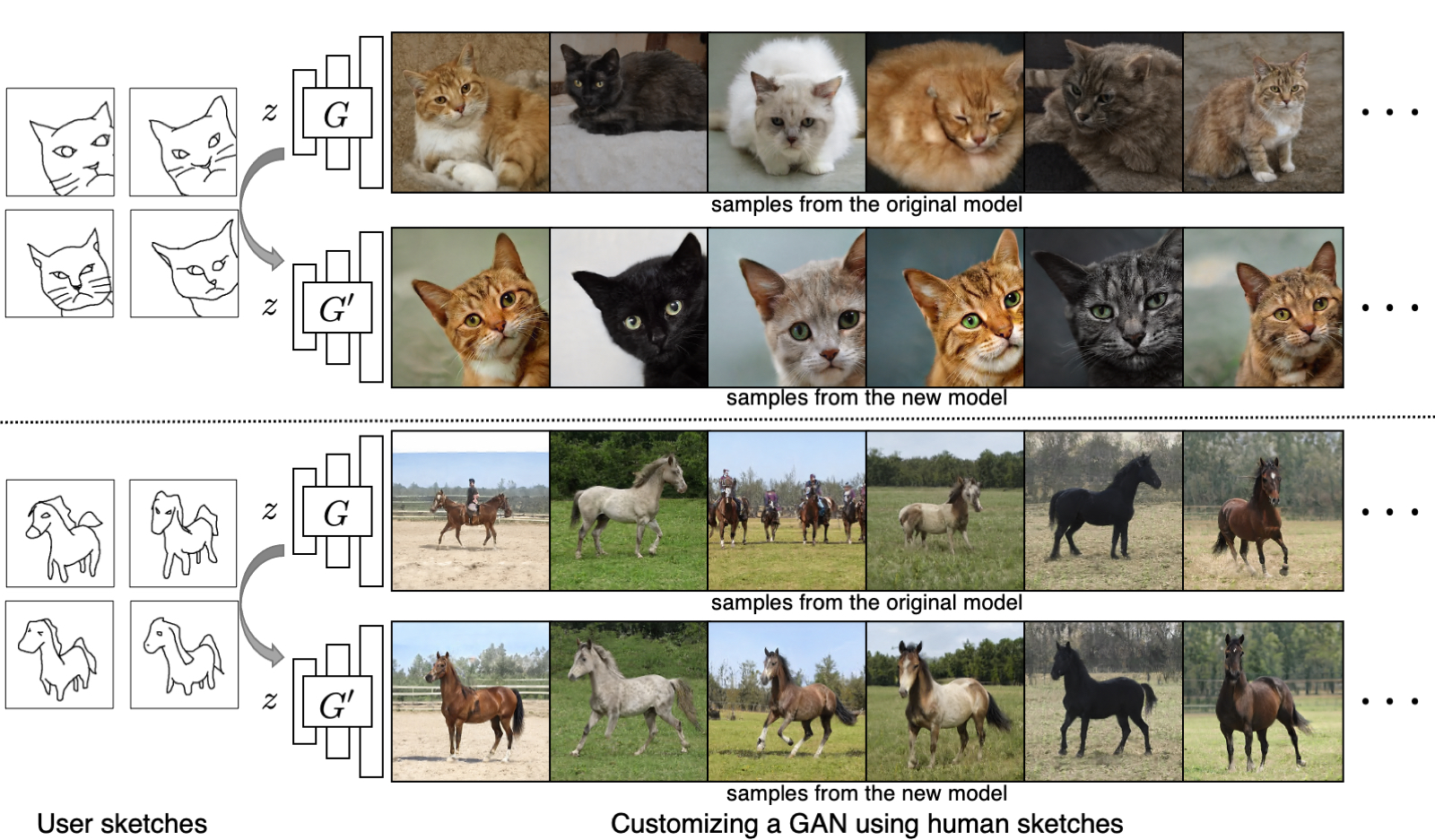

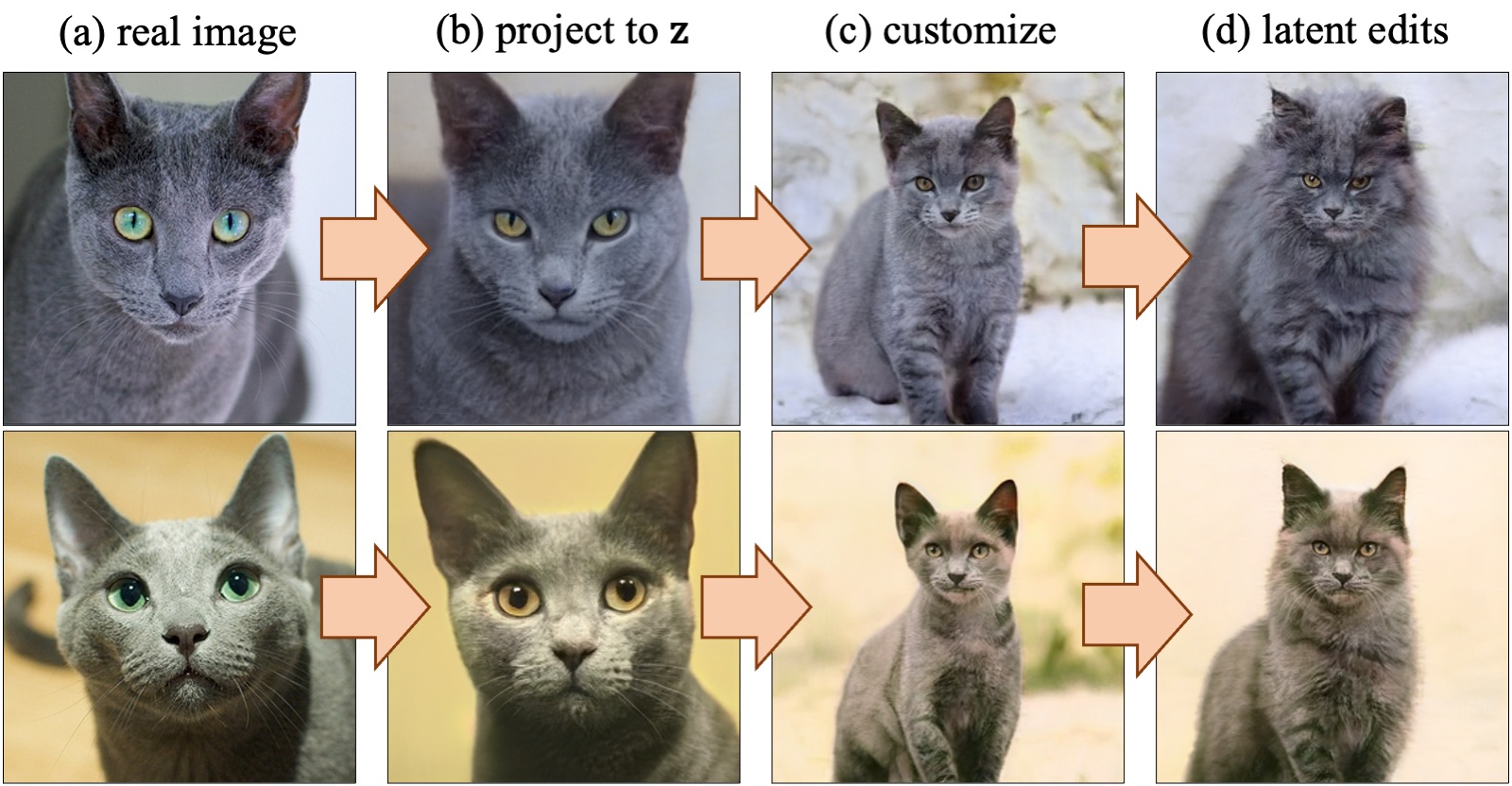

Image editing using our customized models. (a) Given a real image, (b) we project it to the original model's noise z using the method of Huh et al. (c) We feed the projected z to the standing cat model trained on sketches. (d) We edit the image with the `add fur` operation using GANSpace.
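Step (d) can be illustrated with a simplified, GANSpace-style edit: find principal directions of sampled latent codes with PCA, then move a code along one direction. This is only a sketch under stated assumptions. The random arrays stand in for latents sampled from the model and for the projected code of a real image, the function names are hypothetical, and details of GANSpace such as applying an edit to selected layers only are omitted. Which component corresponds to a named edit such as `add fur` is not determined here.

```python
import numpy as np


def principal_directions(latents, num_components):
    """Return the top principal directions of a set of latent codes (one per row)."""
    centered = latents - latents.mean(axis=0)
    # Rows of vt are unit-length principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:num_components]


def edit_latent(latent, direction, strength):
    """Move a latent code along a direction by the given strength."""
    return latent + strength * direction


rng = np.random.default_rng(0)
sampled = rng.standard_normal((1000, 64))  # stand-in for latents sampled from the model
directions = principal_directions(sampled, num_components=5)

projected = rng.standard_normal(64)  # stand-in for the projected code of a real image
edited = edit_latent(projected, directions[0], strength=3.0)
```

The edited code would then be passed to the customized generator in place of the projected one.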

We can interpolate between the customized models by interpolating in the W-latent space.

Model 1 | Interpolation in W-latent space | Model 2

We observe a similar effect by interpolating the model weights directly.

Model 1 | Interpolation in the model weight space | Model 2
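Interpolating model weights directly can be sketched as a per-parameter linear blend of two models with identical architectures. The dictionaries below are toy stand-ins for real checkpoints, and the parameter names and the helper `interpolate_weights` are hypothetical; the released code may organize this differently.

```python
import numpy as np


def interpolate_weights(weights_a, weights_b, t):
    """Blend two parameter dictionaries: t=0 gives weights_a, t=1 gives weights_b."""
    if weights_a.keys() != weights_b.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: (1.0 - t) * weights_a[name] + t * weights_b[name]
        for name in weights_a
    }


# Toy stand-ins for two customized models that share one architecture.
model_1 = {"layer.weight": np.zeros((2, 2)), "layer.bias": np.zeros(2)}
model_2 = {"layer.weight": np.ones((2, 2)), "layer.bias": np.full(2, 4.0)}

halfway = interpolate_weights(model_1, model_2, t=0.5)
```

Sweeping `t` from 0 to 1 and loading each blended dictionary into the generator would give a sequence of models between Model 1 and Model 2.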

Each video shows uncurated samples generated from a model trained on one or more sketch inputs. An example sketch input is shown.

Failure cases

Our method does not work well when the sketch inputs depict a complex pose, or when the sketches are drawn in a distinctive style. The generated images do not match the shapes and poses as faithfully as those from the models shown above.

Related works

- R. Gal, O. Patashnik, H. Maron, A. Bermano, G. Chechik, D. Cohen-Or. "StyleGAN-NADA: CLIP-Guided Domain Adaptation of Image Generators". In arXiv. (concurrent work)

- D. Bau, S. Liu, T. Wang, J.-Y. Zhu, A. Torralba. "Rewriting a Deep Generative Model". In ECCV 2020.

- Y. Wang, A. Gonzalez-Garcia, D. Berga, L. Herranz, F. S. Khan, J. van de Weijer. "MineGAN: effective knowledge transfer from GANs to target domains with few images". In CVPR 2020.

- M. Eitz, J. Hays, M. Alexa. "How Do Humans Sketch Objects?". In SIGGRAPH 2012.

Acknowledgements

We thank Nupur Kumari and Yufei Ye for proofreading the drafts. We are also grateful for helpful discussions with Gaurav Parmar, Kangle Deng, Nupur Kumari, Andrew Liu, Richard Zhang, and Eli Shechtman. We are grateful for the support of the Naver Corporation, DARPA SAIL-ON HR0011-20-C-0022 (to DB), and Signify Lighting Research. The website template is from Colorful Colorization.
